Artificial Intelligence Solutions and Services

Infobell helps enterprises identify high-impact AI and ML use cases, evaluate CPU and GPU architectures for performance, and build production-grade Generative AI/ML, agentic AI, and RAG applications. We deliver AI solutions across industries, including IT operations, telco, retail, healthcare, and real estate. From data lifecycle management to performance benchmarking for AI infrastructure and LLM systems, we develop scalable tools and implementation frameworks for AI/ML use cases such as natural language processing, computer vision, and multimodal AI workloads, partnering closely with industry leaders including NVIDIA, AMD, and Intel.

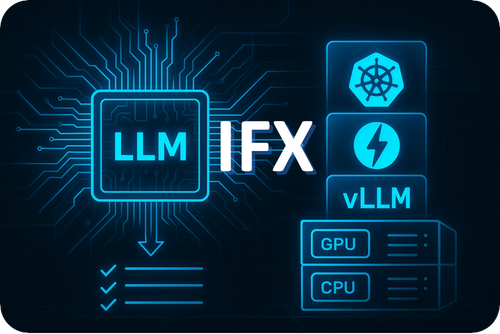

Introducing Infobell Inference Framework eXpress (IFX)

Inference Framework eXpress is a scalable, open-source LLM inference stack engineered for performance, transparency, and enterprise readiness.

NVIDIA Powered AI Solutions by Infobell

Infobell is a trusted NVIDIA partner helping enterprises identify high-impact AI and ML use cases and build and deploy GenAI applications and chatbots using the NVIDIA accelerated computing stack, including GPUs, CUDA, TensorRT, and NVIDIA NIM microservices. We specialize in performance characterization for LLMs, computer vision, and agentic AI workflows across sectors such as IT operations, retail, healthcare, and real estate. From data pipeline design to scalable deployment, Infobell leverages the full NVIDIA ecosystem to deliver state-of-the-art AI solutions at scale. Infobell provides end-to-end product engineering and solution implementation services, from use-case discovery, design, architecture, and AI application development to model tuning, deployment, and operations.

AMD Powered AI Solutions by Infobell

Infobell is an AMD engineering and solutions partner helping enterprises unlock GenAI and ML opportunities with AMD’s open, flexible infrastructure, including Instinct GPUs, EPYC CPUs, and the ROCm software stack. We specialize in performance benchmarking, LLM inference frameworks (including IFX), and customized AI solutions such as chatbots, RAG pipelines, and domain-specific model tuning. Sectors served include retail, healthcare, manufacturing, and energy. From use-case discovery and data pipeline design to model development, fine-tuning, deployment, and ongoing operations, Infobell delivers full-stack AI services to accelerate adoption on AMD’s open ecosystem.

Intel Powered AI Solutions by Infobell

Infobell partners with Intel to deliver robust enterprise AI and ML solutions leveraging Intel Xeon processors, Intel Gaudi AI accelerators, and the OpenVINO™ toolkit. We help customers identify use cases, design architectures, and deploy GenAI applications such as internal copilots, RAG pipelines, and industry-specific LLMs, with a focus on performance tuning, cost-efficiency, and secure deployment. Infobell’s expertise spans workload orchestration, vector databases, and optimization of AI workloads for regulated industries such as finance, industrial IoT, and telco. We offer end-to-end product engineering and solution implementation services, from design to model development, tuning, deployment, and operations on Intel’s open AI stack.

Enterprise AI Solutions

DocPrep for RAG

ConvoGene

Transcribe

VAST

SmartE

AI & Cloud Intelligence

EchoSwift – LLM Performance Tool

Carbon Calculator

Cloud Control

Cloud Migration Advisor

AgenticFlow – Agentic AI Solutions

AgenticFlow empowers enterprises to seamlessly design, develop, and deploy intelligent AI agents and end-to-end workflows. From orchestrating autonomous decision-making to integrating multi-agent systems into real-world applications, AgenticFlow provides a unified foundation for building adaptive, scalable AI solutions. With a modular architecture, no-code/low-code interfaces, and enterprise-grade orchestration, it accelerates AI adoption across diverse use cases, enhancing productivity, automation, and responsiveness at scale.

AI Agents – Automate queries with human interface

AI Infrastructure and Software Development Services

GenAI offerings: on-prem / hybrid

On-prem Kubernetes (K8s) – Red Hat OpenShift, VMware Private AI, Nutanix GPT-in-a-Box

Cloud – EKS, GKE, etc.

Showcase near-real scaling, LLMOps, and deployment on CPUs and GPUs (NVIDIA and AMD)

Performance & Architecture Services

TCO Analysis, Sizing, Scaling, Reference Architectures, Benchmarking

- TCO Analysis for customer solutions

- Sizing and Reference Architecture for customer use cases

- Benchmarking-as-a-Service

- Scale-test and identify architectural bottlenecks for production deployments

Accelerate AI Implementations – Ease of Adoption

Accelerate AI Go-to-Market – Ease of Adoption

AI Apps Research and Development

Research and investigation of models and training

Bring-up and deployment of software and environments for analysis

AI Performance and Scale Tests

Run Experiments

Inferencing

Training

Comparison of models

Run Benchmarks

Build benchmarks for comparative analysis across different types of infrastructure and models (e.g., EchoSwift)

Generate new datasets

Expertise in running MLPerf, TPCx-AI, and HPC benchmarks on GPUs, as well as LLM inference benchmarks

Fine-tune, analyze, debug

Build Guides

Easy-to-use guides that help customers build their own solutions

Reference Architecture

Tried-and-tested recommended architectures for a range of solutions

Infra – Sizing and TCO Analysis

- Sizing reference architectures for different configurations of GPU, CPU, scale, and performance

- TCO analysis for customers

Live Demo & Templates

DIY templates and live demos for various verticals and use cases, such as chatbots and copilots

GTM Demos

Support / Drive Customer PoCs

Successful PoCs translate into customer adoption; we can drive customer PoCs or provide 24/7 support for them.